AI company Sesame has open-sourced the base model behind Maya, its remarkably realistic voice assistant. The model, CSM-1B, has 1 billion parameters and is released under the Apache 2.0 license, which permits commercial use with few restrictions.

CSM-1B generates RVQ (Residual Vector Quantization) audio codes from text and audio inputs, according to Sesame's listing on the AI development platform Hugging Face. RVQ is a technique for encoding audio into discrete tokens called codes, and it is also used in other AI audio systems such as Google's SoundStream and Meta's Encodec.
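To make the idea of residual quantization concrete, here is a toy sketch in Python. It is a generic illustration of the technique, not code from Sesame, SoundStream, or Encodec: the two-dimensional vectors and the hand-picked codebooks are hypothetical, whereas real audio codecs learn their codebooks and quantize high-dimensional encoder outputs frame by frame. Each stage picks the codebook entry closest to whatever error the earlier stages left behind, so a short list of integer indices (the "codes") approximates a continuous vector.

```python
from typing import List, Sequence

Vector = List[float]


def nearest(codebook: Sequence[Vector], x: Vector) -> int:
    """Return the index of the codebook entry closest to x (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], x)),
    )


def rvq_encode(x: Vector, codebooks: Sequence[Sequence[Vector]]) -> List[int]:
    """Quantize x with a stack of codebooks; each stage encodes what earlier stages missed."""
    residual = list(x)
    codes = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        codes.append(idx)
        # Subtract the chosen entry so the next stage only sees the remaining error.
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return codes


def rvq_decode(codes: Sequence[int], codebooks: Sequence[Sequence[Vector]]) -> Vector:
    """Reconstruct an approximation by summing the selected entry from each codebook."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


# Hypothetical toy codebooks: a coarse first stage and a finer second stage.
codebooks = [
    [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]],
    [[0.0, 0.0], [0.25, 0.0], [0.0, 0.25], [-0.25, -0.25]],
]

codes = rvq_encode([1.2, 0.9], codebooks)
print(codes)                          # [1, 1]
print(rvq_decode(codes, codebooks))   # [1.25, 1.0]
```

Adding stages shrinks the reconstruction error: with only the coarse codebook, `[1.2, 0.9]` decodes to `[1.0, 1.0]`, and the second stage refines it to `[1.25, 1.0]`. This stacking is why RVQ-based codecs can typically trade bitrate for quality by keeping more or fewer codebooks.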

CSM-1B uses a model from Meta's Llama family as its backbone, paired with an audio decoder that produces the speech output. Although the base CSM-1B can generate a variety of voices, Sesame confirms that Maya runs on a fine-tuned version of the model.

Open-Source Availability and Limitations

Sesame describes CSM-1B as a “base generation model”, meaning it hasn't been fine-tuned on any specific voice. However, because of unintended contamination in its training data, the model may have some limited ability to handle non-English languages.

Despite the open-source release, Sesame has not disclosed the dataset used to train CSM-1B. This raises questions about the model's underlying data sources and potential biases in the voices it generates.

Ethical Concerns and Security Risks of CSM-1B Voice Model

One of the most pressing concerns is the model’s lack of built-in safeguards. Sesame urges developers to follow ethical guidelines, advising against using the model for:

- Cloning voices without consent

- Creating misleading content, such as fake news

- Engaging in harmful or malicious activities

However, a hands-on test of the model's demo on Hugging Face showed that it could clone a voice in under a minute, which makes it a potential tool for misuse. This echoes recent warnings from Consumer Reports, which found that many AI-powered voice cloning tools lack meaningful safeguards against fraud.

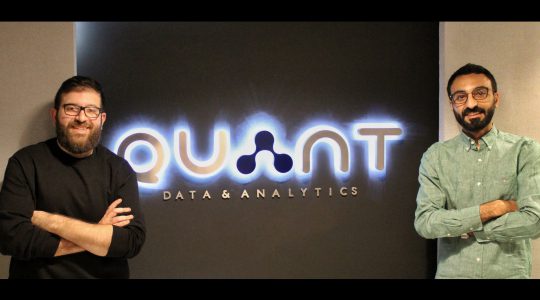

Sesame has drawn significant attention in the AI community, particularly after co-founder Brendan Iribe, who also co-created Oculus, unveiled the company's next-generation voice assistant technology.

Maya, along with Sesame’s second assistant, Miles, pushes the boundaries of natural-sounding AI voices.

These assistants can:

- Pause naturally while speaking

- Include subtle speech disfluencies for realism

- Be interrupted mid-sentence, similar to OpenAI’s Voice Mode

Beyond voice AI, Sesame is expanding into wearable technology. The company is developing AI glasses designed for all-day wear, which will integrate its advanced voice assistant models.

Although Sesame has not publicly disclosed its total funding, it has secured investment from major venture capital firms, including Andreessen Horowitz, Spark Capital, and Matrix Partners.

While Sesame's CSM-1B model represents a leap forward in AI-generated speech, its open-source release raises ethical concerns about misuse, deepfakes, and voice cloning without consent. As AI-generated voices become more lifelike, the industry will need stronger safeguards to prevent their exploitation.